AI models are the core systems that power modern artificial intelligence. An AI model is a trained algorithm that learns patterns from data and uses them to make predictions, generate content, recognise images, understand language, or automate decisions. From chatbots and search engines to medical diagnosis, finance, marketing, and autonomous systems, AI models transform raw data into intelligent actions. What makes them powerful is not just speed, but their ability to improve over time through machine learning, deep learning, and continuous evaluation. Today’s most advanced AI models are built on large datasets, refined with training techniques, and tested for accuracy, safety, and real-world reliability.

What sets AI models apart is how they adapt to different tasks and industries. Some models specialise in language (NLP), others in vision, speech, recommendation systems, or predictive analytics. Businesses use AI models to personalise customer experiences, optimise operations, detect fraud, and gain strategic insights, while developers rely on them to build scalable, intelligent applications. As AI becomes more integrated into everyday technology, understanding how AI models are trained, evaluated, and applied is essential for anyone working in digital products, data science, marketing, healthcare, or automation. In this guide, you will learn what AI models are, how they work, and why they are reshaping the future of technology.

Why Accuracy and Safety Matter in AI Systems

AI models are used in real-world decisions that affect people’s health, money, education, and privacy. If an AI system gives incorrect or biased information, the consequences can be serious. This is why companies rely on structured AI content review processes before deployment.

Accuracy ensures:

- Answers are factually correct

- Predictions are reliable

- Outputs match user intent

Safety ensures:

- Harmful or illegal content is filtered

- Bias and discrimination are reduced

- Systems do not mislead, manipulate, or cause damage

AI without strong accuracy and safety checks can spread misinformation, reinforce unfair stereotypes, or make decisions that harm individuals and businesses. This is why human training and review are built into every responsible AI system.

Accuracy vs Safety in AI Systems

| Aspect | What It Means in AI | Why It Matters |
|---|---|---|
| Accuracy | AI provides factually correct, relevant, and consistent outputs | Prevents wrong diagnoses, financial errors, and misleading information |
| Safety | AI avoids harmful, illegal, biased, or unethical content | Protects users from discrimination, misinformation, and exploitation |
| Trust | Users rely on AI for important decisions | Builds long-term adoption and brand credibility |
| Compliance | AI follows laws, policies, and platform rules | Prevents legal risks and regulatory penalties |
| Reliability | AI performs well across real-world situations | Ensures stable and predictable system behaviour |

Who Is Responsible for Training AI Models

Training AI is not done by a single role. It is a structured process involving multiple specialists, each responsible for a different stage of quality and safety.

The key contributors include:

- Data Annotators

- AI Evaluators

- Machine Learning Engineers

- AI Safety Researchers

- Ethics and Compliance Teams

- Human-in-the-Loop Reviewers

Let’s look at what each of these roles does.

Key Roles in AI Training and Their Responsibilities

| Role | Main Responsibility | Impact on Accuracy | Impact on Safety |
|---|---|---|---|
| Data Annotators | Label and structure training data | Improves learning precision | Flags harmful or incorrect data |
| AI Evaluators | Test and score AI outputs | Identifies errors and hallucinations | Detects unsafe, biased, or harmful responses |
| Machine Learning Engineers | Build and optimise models | Enhances prediction performance | Implements filters and guardrails |
| AI Safety Researchers | Analyse risks and failure cases | Improves robustness | Prevents misuse and security vulnerabilities |
| Ethics & Compliance Teams | Set legal and ethical boundaries | Ensures responsible use | Enforces fairness and regulatory compliance |
| Human-in-the-Loop Reviewers | Monitor and correct real-world outputs | Refines performance | Catches new risks and intervenes when outputs go wrong |

Data Annotators

Data annotators are the first people involved in training an AI system. They label raw data so the model can learn patterns. This includes marking images, classifying text, transcribing audio, and tagging meaning in sentences.

For example:

- They label objects in images (“car,” “person,” “traffic light”)

- They classify text as positive, negative, or neutral

- They tag harmful content, misinformation, or policy-breaking language

Without high-quality labelled data, an AI model cannot learn accurately. If the labels are inconsistent or biased, the model will reflect those errors. This process directly supports the role explained in The Role of Data Labelling Evaluators.

What data annotators do:

- Label images, text, audio, and video

- Identify correct vs incorrect outputs

- Flag harmful or inappropriate content

- Create training datasets for supervised learning

They form the foundation of AI accuracy.
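Label consistency can be measured rather than assumed. The sketch below shows one common way to do it: Cohen's kappa, which compares how often two annotators agree with how often they would agree by chance. The function name `cohens_kappa` and the sample labels are illustrative assumptions, not part of any specific annotation tool.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Agreement between two annotators, corrected for chance agreement."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items both annotators labelled the same.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each annotator labelled at random,
    # using their own label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical sentiment labels from two annotators on the same six texts.
annotator_1 = ["positive", "negative", "neutral", "positive", "negative", "positive"]
annotator_2 = ["positive", "negative", "positive", "positive", "neutral", "positive"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.2f}")  # prints: kappa = 0.43
```

A value of 1 means the annotators always agree, and a value near 0 means they agree no more often than chance would predict. A low score is usually a sign that the labelling guidelines need tightening before the data is used for training.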

AI Evaluators

Once a model is trained, it must be tested. This is where AI evaluators step in. Their job is to assess the outputs produced by the AI and decide whether they are accurate, relevant, safe, and useful.

They evaluate:

- Factual correctness

- Clarity and completeness

- Bias or harmful language

- Compliance with safety guidelines

AI evaluators often compare multiple AI responses and choose the best one. Their choices are then fed back into the system to improve future results.

What AI evaluators do:

- Review AI responses to real user queries

- Score answers for accuracy and safety

- Identify hallucinations and misleading claims

- Report patterns of bias or unsafe behaviour

This role is critical for preventing AI from releasing wrong or dangerous information.
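To make the comparison step concrete, here is a minimal sketch of how pairwise choices from evaluators could be tallied into a win rate per response. The record format, the response ids, and the `win_rates` helper are assumptions made for illustration. Real preference pipelines are typically more involved.

```python
from collections import defaultdict

def win_rates(comparisons):
    """Return {response_id: wins / appearances} from pairwise evaluator choices."""
    wins = defaultdict(int)
    appearances = defaultdict(int)
    for first, second, preferred in comparisons:
        if preferred not in (first, second):
            raise ValueError(f"preferred id {preferred!r} is not one of the pair")
        appearances[first] += 1
        appearances[second] += 1
        wins[preferred] += 1
    return {rid: wins[rid] / appearances[rid] for rid in appearances}

# Hypothetical records: (first response, second response, evaluator's choice).
comparisons = [
    ("resp_a", "resp_b", "resp_a"),
    ("resp_a", "resp_c", "resp_c"),
    ("resp_b", "resp_c", "resp_c"),
    ("resp_a", "resp_b", "resp_a"),
]

rates = win_rates(comparisons)
best = max(rates, key=rates.get)
print(best, rates)
```

In this sample, `resp_c` wins every comparison it appears in, so it comes out on top. A tally like this is only a summary of evaluator judgement; how the result feeds into training depends on the system.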

Machine Learning Engineers

Machine learning engineers design the algorithms that power AI systems. They select model architectures, tune parameters, and integrate training data into functional systems.

They are responsible for:

- Designing training pipelines

- Optimising performance

- Reducing error rates

- Applying reinforcement learning techniques

Engineers rely on feedback from annotators and evaluators to adjust how models learn. If evaluators report recurring mistakes, engineers modify the training process to correct them.

What ML engineers do:

- Train models using labelled datasets

- Improve prediction accuracy

- Implement safety filters

- Monitor performance metrics

They ensure the AI behaves consistently and improves over time.

AI Safety Researchers

AI safety researchers focus on how systems can fail and how to prevent those failures. They study issues like:

- Misinformation

- Algorithmic bias

- Prompt manipulation

- Security vulnerabilities

- Misuse of AI tools

Their work helps organisations anticipate risks before AI is widely deployed, and it supports the safe deployment practices described in AI Content Review Before Deployment.

What AI safety researchers do:

- Stress-test AI systems with edge cases

- Analyse harmful outputs

- Design guardrails and safety policies

- Develop mitigation strategies for misuse

They play a central role in making AI robust, responsible, and aligned with human values.

Ethics and Compliance Teams

Ethics teams set the rules that guide AI behaviour. They ensure systems follow legal, cultural, and organisational standards. This includes compliance with:

- Data protection laws

- Anti-discrimination policies

- Industry regulations

- Content moderation guidelines

These teams decide what the AI should not generate, such as:

- Hate speech

- Medical or legal advice without disclaimers

- Violent or exploitative content

What ethics teams do:

- Create AI usage policies

- Define acceptable and restricted content

- Review training practices for fairness

- Audit AI systems for regulatory compliance

They make sure AI development respects human rights and social responsibility.

Human-in-the-Loop Reviewers

Even after deployment, AI systems are not left alone. Human-in-the-loop reviewers monitor outputs, handle edge cases, and intervene when something goes wrong.

This process ensures that:

- New risks are identified

- Errors are corrected quickly

- Models improve with real-world feedback

What human reviewers do:

- Review flagged AI outputs

- Correct incorrect responses

- Feed improvements back into the system

- Monitor long-term performance trends

This ongoing oversight keeps AI aligned with user needs and safety standards.

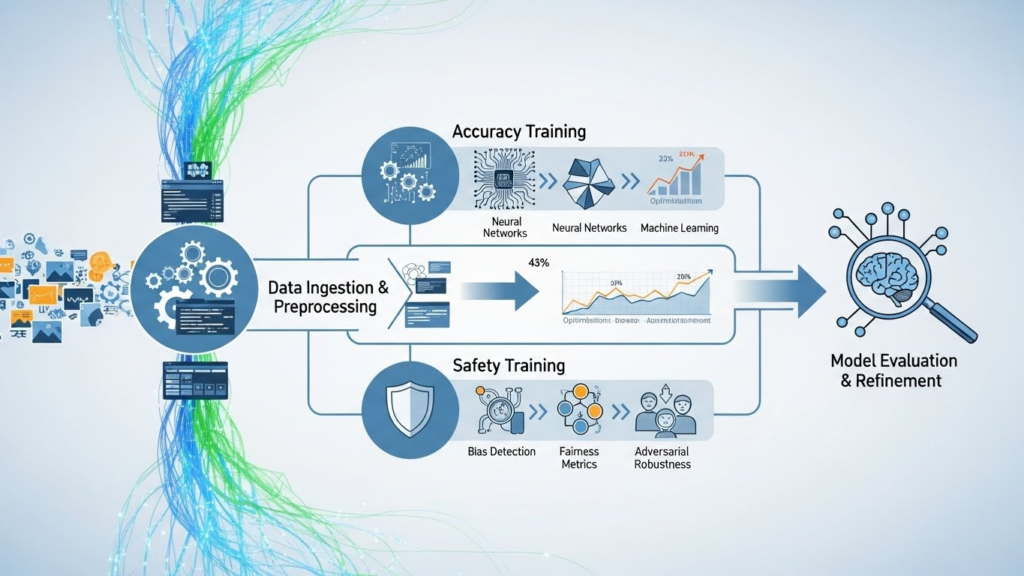

How AI Models Are Trained for Accuracy and Safety

AI training follows a structured workflow. Each stage is designed to reduce errors and prevent harm.

Step 1: Data Collection

Large datasets are gathered from text, images, videos, and audio sources. These datasets must be diverse and representative to avoid bias.

Step 2: Data Labelling

Data annotators label and classify the data so the model can learn from it.

Step 3: Model Training

Machine learning engineers train the model using the labelled data. The model learns to predict outcomes based on patterns.

Step 4: Evaluation and Testing

AI evaluators test the system using real-world scenarios. Outputs are scored for correctness, clarity, and safety.

Step 5: Bias and Safety Review

Safety researchers and ethics teams review the system for harmful patterns, unfair treatment, or regulatory issues.

Step 6: Human Feedback Integration

Human-in-the-loop reviewers provide ongoing corrections that improve performance over time.

Step 7: Continuous Monitoring

After release, performance is tracked and updates are applied when new risks or errors appear.
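As a rough illustration of Step 7, the sketch below tracks the error rate over the most recent reviewed outputs and signals when it crosses a threshold. The class name `ErrorRateMonitor`, the window size, and the threshold are all hypothetical choices; production monitoring would normally track many more signals.

```python
from collections import deque

class ErrorRateMonitor:
    """Tracks the share of errors among the most recent reviewed outputs."""

    def __init__(self, window_size=100, alert_threshold=0.05):
        # deque with maxlen drops the oldest outcome once the window is full.
        self.outcomes = deque(maxlen=window_size)
        self.alert_threshold = alert_threshold

    def record(self, was_error):
        self.outcomes.append(bool(was_error))

    def error_rate(self):
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        # True when the recent error rate is above the allowed threshold.
        return self.error_rate() > self.alert_threshold

monitor = ErrorRateMonitor(window_size=10, alert_threshold=0.2)
for was_error in [False] * 8 + [True] * 2:
    monitor.record(was_error)
print(monitor.error_rate(), monitor.needs_review())

monitor.record(True)  # a third error pushes the oldest correct outcome out
print(monitor.error_rate(), monitor.needs_review())
```

Using a fixed window means older results stop counting, so the monitor reflects current behaviour rather than the lifetime average.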

Roles in the AI Training Process

| Stage | Who Is Involved | Purpose |
|---|---|---|
| Data labelling | Data Annotators | Teach the model what data means |
| Model training | ML Engineers | Build and optimise the AI |
| Output testing | AI Evaluators | Check accuracy and relevance |
| Safety audits | AI Safety Researchers | Identify risks and misuse |
| Policy review | Ethics Teams | Ensure legal and ethical compliance |
| Post-launch review | Human-in-the-Loop Reviewers | Monitor and improve performance |

This collaborative structure ensures that no single group controls AI behaviour alone.

What Does Human in the Loop Mean in AI

Human-in-the-loop (HITL) means that people remain involved in decision-making rather than allowing AI to operate independently.

In practice, this means:

- Humans approve or reject AI outputs

- Humans correct errors and bias

- Humans handle complex or sensitive cases

HITL is essential in:

- Healthcare AI

- Financial systems

- Content moderation

- Autonomous systems

It ensures AI remains accountable to human judgement.
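One simple way to picture HITL in software is a routing rule: outputs on sensitive topics, or outputs the model is unsure about, go to a person instead of being released automatically. The sketch below assumes a hypothetical `ModelOutput` record with a confidence score and a topic tag, plus an illustrative list of sensitive topics. None of these names come from a real product.

```python
from dataclasses import dataclass

# Hypothetical set of topics that always require a human decision.
SENSITIVE_TOPICS = {"medical", "legal", "financial"}

@dataclass
class ModelOutput:
    text: str
    confidence: float  # assumed to be a score between 0 and 1
    topic: str

def route(output, min_confidence=0.9):
    """Decide whether an output can be released or needs a human reviewer."""
    if output.topic in SENSITIVE_TOPICS:
        return "human_review"  # sensitive cases always go to a person
    if output.confidence < min_confidence:
        return "human_review"  # the model is not sure enough on its own
    return "auto_approve"

examples = [
    ModelOutput("Take this dosage twice a day.", 0.99, "medical"),
    ModelOutput("The meeting is probably on Tuesday.", 0.55, "general"),
    ModelOutput("Paris is the capital of France.", 0.97, "general"),
]
for item in examples:
    print(route(item), "<-", item.text)
```

The threshold of 0.9 is arbitrary here. In practice it would be tuned against how much reviewer time is available and how costly a mistake is in the given domain.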

How Companies Prevent Bias and Misinformation

Bias and misinformation are two of the biggest risks in AI. To control them, organisations use several strategies:

- Diverse training data to reduce cultural or demographic bias

- Multiple human reviewers to avoid one-sided judgement

- Bias audits to test for unfair treatment across groups

- Safety filters to block harmful content

- Feedback loops to correct mistakes in real time

These practices help ensure that AI outputs are not just accurate, but also fair and socially responsible.
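A bias audit can start with something as simple as comparing outcomes across groups. The sketch below computes the approval rate per group and the gap between the highest and lowest rate. The group names, the sample decisions, and the helper names are made up for illustration, and a gap on its own does not prove unfair treatment; it only marks where reviewers should look more closely.

```python
from collections import defaultdict

def selection_rates(records):
    """records: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in records:
        totals[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / totals[group] for group in totals}

def parity_gap(rates):
    """Difference between the highest and lowest group approval rate."""
    return max(rates.values()) - min(rates.values())

# Hypothetical model decisions: 8 of 10 approved for group_a, 5 of 10 for group_b.
records = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)

audit_rates = selection_rates(records)
print(audit_rates)
print(f"gap = {parity_gap(audit_rates):.2f}")  # prints: gap = 0.30
```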

Is Training AI a Real Job

Yes. AI training is a fast-growing career field. Roles include:

- Data Annotator

- AI Evaluator

- Machine Learning Engineer

- AI Safety Analyst

- Ethics and Compliance Officer

These professionals work in technology companies, research labs, healthcare, finance, and government. For career entry paths, see How to Become an Expert AI Model Evaluation Reviewer.

Common skills required:

- Critical thinking

- Attention to detail

- Understanding of AI systems

- Knowledge of data ethics

- Communication and analytical skills

For many people, roles like AI evaluation and annotation offer entry points into the AI industry without requiring advanced programming.

Why AI Still Needs Humans

Despite advances in automation, AI cannot:

- Understand social context the way humans do

- Judge moral or ethical situations independently

- Detect subtle bias without guidance

- Take responsibility for harmful outcomes

Humans provide:

- Contextual understanding

- Ethical reasoning

- Accountability

- Continuous learning from real-world feedback

This is why every trustworthy AI system depends on human oversight.

Real World Examples of AI Training for Safety

Healthcare

Medical AI tools are reviewed by doctors and clinical experts to check that diagnoses are accurate and that the tools do not produce harmful recommendations.

Finance

Fraud detection systems are audited by analysts to reduce false positives that could block legitimate transactions.

Content Moderation

Human reviewers assess flagged content to prevent hate speech, misinformation, and harmful material from spreading.

Autonomous Systems

Self-driving technology is tested by safety engineers who simulate thousands of edge cases before deployment.

In each case, AI supports decisions but humans remain responsible.

Common Misconceptions About AI Training

Misconception: AI trains itself completely.

Reality: AI learns from data, but humans design the training process and correct mistakes.

Misconception: Once trained, AI is finished.

Reality: AI systems require continuous monitoring and updates to remain safe.

Misconception: AI is unbiased by default.

Reality: AI reflects the data it is trained on. Human oversight is needed to detect and correct bias.

Misconception: Safety reduces performance.

Reality: Safety improves trust, adoption, and long-term system reliability.

Conclusion

Training AI models for accuracy and safety is not the job of a single role or organisation. It is a layered system of data annotators, AI trainers, evaluators, engineers, policy experts, and independent reviewers working together. From collecting and labelling data to testing outputs for bias, hallucinations, and harmful behaviour, each group plays a critical role in shaping how reliable and trustworthy an AI system becomes. Accuracy comes from high-quality training data and continuous model evaluation, while safety depends on strict guidelines, red-teaming, and real-world monitoring.

As AI systems become more integrated into healthcare, finance, education, and daily decision-making, this human oversight becomes even more important. Well-trained AI is not just about performance; it is about responsibility. The future of AI will be defined not only by better algorithms, but by how seriously organisations invest in ethical training, transparent evaluation, and long-term governance to ensure AI remains safe, fair, and aligned with human values.

FAQs

1. Who is responsible for training AI models?

AI models are trained by a combination of machine learning engineers, data scientists, data annotators, AI trainers, and evaluators. In addition, ethics teams, legal experts, and external auditors help ensure safety and compliance.

2. What is the role of data annotators in AI training?

Data annotators label text, images, audio, and video so AI models can learn patterns accurately. Their work directly affects how well the model understands language, objects, intent, and real-world scenarios.

3. Who checks if an AI model is safe before release?

AI evaluators, red team specialists, and policy reviewers test the system for bias, misinformation, harmful content, and misuse. Many companies also use third-party audits to validate safety standards.

4. How do companies ensure AI accuracy?

Accuracy is improved through high-quality training data, continuous testing, human feedback loops, error analysis, and regular model updates based on real-world performance.

5. What is AI red teaming?

Red teaming is when specialists intentionally try to break or misuse an AI system to uncover vulnerabilities, safety risks, or ethical failures before the product reaches users.

6. Are governments involved in AI safety?

Yes. Governments and regulatory bodies create AI governance frameworks, compliance standards, and data protection laws that guide how AI systems must be trained, tested, and deployed.

7. Can AI models be trained without humans?

No. While algorithms learn automatically, humans are essential for data preparation, evaluation, ethical oversight, bias detection, and deciding what the AI is allowed or not allowed to do.

8. Why is human oversight important in AI training?

Human oversight ensures AI systems remain accurate, unbiased, and aligned with social and legal norms. Without it, models can produce harmful, misleading, or discriminatory outputs.