Artificial intelligence relies heavily on high-quality data to perform accurately and effectively. Even minor errors or inconsistencies in training data can lead to flawed AI outputs, which can affect decision-making, predictions, and user experience. That’s where AI training task evaluator tools come in: they help ensure data quality, verify labeling accuracy, and identify mistakes before they impact AI performance. These tools are crucial for businesses, developers, and AI teams aiming to build reliable and unbiased AI models.

From checking annotation quality to assessing task performance and monitoring bias, AI training task evaluator tools streamline the validation process. They provide actionable insights, automate repetitive checks, and maintain consistency across large datasets. By using these tools, organizations can save time, reduce risks, and improve the overall accuracy of AI models, making them an essential part of any AI development workflow.

What Are AI Training Task Evaluator Tools?

AI training task evaluator tools are systems, platforms, or services used to review and validate AI training data and tasks. Their main purpose is to ensure that the data used to train AI models is correct, consistent, unbiased, and aligned with project requirements.

These tools evaluate tasks such as:

- Data labeling and annotation

- Search relevance judgments

- Content moderation decisions

- Image, video, audio, and text classification

- Model output validation

Evaluation can be fully automated, human-driven, or hybrid (human-in-the-loop). Most high-accuracy AI systems rely on a hybrid approach, where automated systems handle scale and humans handle judgment.

Why Data Accuracy Matters in AI Training

Data accuracy directly affects how an AI model learns and performs in real-world scenarios. Poor data quality leads to unreliable results, user dissatisfaction, and even ethical or legal risks.

Key reasons accuracy is critical:

- Better model performance: Clean data leads to higher precision and recall.

- Reduced bias: Accurate evaluation helps identify skewed or unfair data.

- Lower retraining costs: Fixing errors early is cheaper than retraining models.

- Improved trust: Reliable AI builds confidence among users and stakeholders.

In short, accurate training data is the foundation of responsible and scalable AI.

How AI Training Tasks Are Evaluated

AI training task evaluation usually follows a structured process:

- Task review: Check if instructions are clear and consistent

- Accuracy validation: Confirm labels or judgments are correct

- Bias detection: Identify cultural, gender, or regional bias

- Consistency checks: Ensure similar tasks receive similar judgments

- Feedback loop: Improve guidelines and future tasks
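The consistency check in the steps above is often quantified with an inter-annotator agreement score. Below is a minimal sketch of Cohen's kappa for two annotators, assuming both labeled the same items in the same order. The function name and the sample labels are made up for illustration and do not come from any specific tool.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Share of items on which the two annotators gave the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    count_a = Counter(labels_a)
    count_b = Counter(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators on six items.
annotator_1 = ["spam", "spam", "ok", "ok", "spam", "ok"]
annotator_2 = ["spam", "ok", "ok", "ok", "spam", "ok"]
kappa = cohen_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.2f}")
```

A kappa near 1 suggests the guidelines are being applied consistently, while a value near 0 means agreement is no better than chance. What counts as "good enough" depends on the task and is a judgment call for each project.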

Common AI Training Tasks and Evaluation Focus

| Training Task Type | What Is Evaluated | Accuracy Impact |
|---|---|---|
| Text annotation | Label correctness, intent match | High |
| Image labeling | Object accuracy, bounding boxes | High |
| Audio transcription | Word accuracy, timestamps | Medium–High |
| Search relevance | Query–result alignment | High |
| Content moderation | Policy compliance | High |

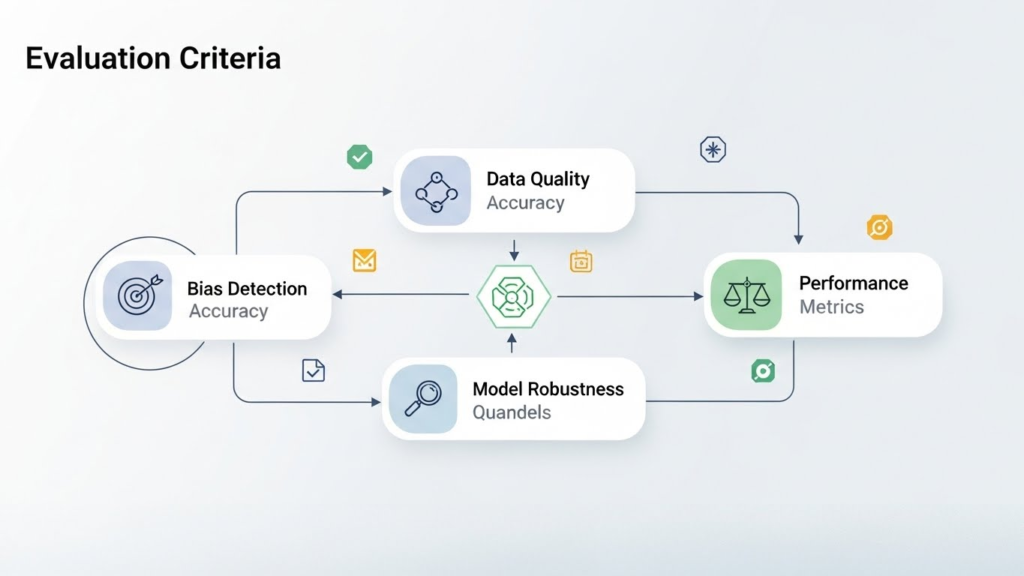
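For the image labeling row in the table above, bounding-box quality is commonly scored with intersection over union (IoU) against a reference box. The sketch below assumes boxes are given as `(x_min, y_min, x_max, y_max)`. The 0.5 pass threshold is an illustrative assumption, not a universal standard.

```python
def iou(box_a, box_b):
    """Intersection over union for boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clamped to zero when boxes do not touch.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical reference box and an annotator's submitted box.
reference = (0, 0, 10, 10)
submitted = (5, 0, 15, 10)
score = iou(reference, submitted)
passes = score >= 0.5  # assumed threshold for this example
print(f"IoU = {score:.2f}, passes = {passes}")
```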

Evaluation Criteria Used by AI Training Task Evaluator Tools

Before choosing or ranking evaluator tools, it is important to understand the criteria that matter most.

Core evaluation factors:

- Accuracy measurement methods

- Human-in-the-loop support

- Scalability for large datasets

- Bias and fairness checks

- Security and data privacy

- Clear reporting and quality metrics

Tools that perform well across these areas are more reliable for long-term AI projects.

Top 10 AI Training Task Evaluator Tools for Data Accuracy

Below is a curated list of evaluator tools and platforms commonly used in AI training workflows. The focus is on accuracy, reliability, and evaluation quality, not marketing hype.

1. Human-in-the-Loop Evaluation Platforms

Human-in-the-Loop Evaluation Platforms combine human judgment with AI systems to improve accuracy and reliability. These platforms allow real people to review, validate, and correct AI outputs where automation alone may fall short. By adding human feedback into the evaluation process, organizations can reduce errors, improve data quality, and build more trustworthy AI models.

Best for: NLP, search evaluation, content review

Accuracy benefit: Human judgment reduces edge-case errors

2. Quality Assurance

Quality Assurance (QA) tools check that annotated data meets defined quality standards before it is used for training. They focus on preventing errors by improving annotation processes, workflows, and evaluation methods. Through regular checks and clear guidelines, QA helps maintain accuracy, reliability, and overall performance.

Best for: Large-scale annotation projects

Accuracy benefit: Detects inconsistencies and labeling mistakes

3. Consensus-Based Evaluation Tools

Consensus-Based Evaluation Tools are systems that assess data accuracy by combining feedback from multiple reviewers instead of relying on a single opinion. These tools compare different evaluations to find common agreement, which helps reduce individual bias and errors. By using collective judgment, they deliver more reliable, balanced, and trustworthy evaluation results.

Best for: High-risk or subjective data

Accuracy benefit: Reduces individual evaluator bias
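One simple way to combine multiple reviewers is a majority vote with a minimum agreement level. The sketch below is only an illustration: the function name, the 0.6 threshold, and the sample votes are assumptions, and real platforms may weight reviewers differently.

```python
from collections import Counter


def consensus_label(votes, min_agreement=0.6):
    """Return (label, agreement), or (None, agreement) when consensus is too weak."""
    if not votes:
        raise ValueError("votes must not be empty")
    label, count = Counter(votes).most_common(1)[0]
    agreement = count / len(votes)
    if agreement < min_agreement:
        # No clear majority: leave the item unlabeled so it can be escalated.
        return None, agreement
    return label, agreement


# Hypothetical votes from three reviewers on two search-relevance tasks.
clear = consensus_label(["relevant", "relevant", "not_relevant"])
split = consensus_label(["relevant", "not_relevant", "unsure"])
print(clear, split)
```

Items that come back as `None` are good candidates for a senior reviewer or for a guideline update, since disagreement often points to unclear instructions.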

4. Bias Detection and Fairness Tools

Bias Detection and Fairness Tools are designed to identify and reduce unfair patterns in AI models and datasets. These tools analyze data, algorithms, and outputs to detect bias related to gender, race, location, or other sensitive factors. By improving transparency and balance, they help ensure AI systems produce fair, ethical, and reliable results.

Best for: Ethical AI projects

Accuracy benefit: Improves fairness and inclusivity
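A basic check of this kind compares how often a given label is assigned across groups. The sketch below assumes each record is a `(group, label)` pair. The group names, the labels, and the idea of flagging a large gap are illustrative assumptions. A gap is a signal to investigate, not proof of bias on its own.

```python
from collections import defaultdict


def positive_rate_by_group(records, positive_label="approved"):
    """Share of records carrying the positive label, per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, label in records:
        totals[group] += 1
        if label == positive_label:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def max_rate_gap(rates):
    """Largest difference in positive rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Made-up labeled records for two regions.
records = [
    ("region_a", "approved"), ("region_a", "approved"),
    ("region_a", "rejected"), ("region_a", "approved"),
    ("region_b", "approved"), ("region_b", "rejected"),
    ("region_b", "rejected"), ("region_b", "rejected"),
]
rates = positive_rate_by_group(records)
gap = max_rate_gap(rates)
print(rates, gap)
```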

5. Automated Validation Engines

Automated Validation Engines are systems that automatically check data, tasks, or outputs against predefined rules and quality standards. They help identify errors, inconsistencies, and missing information without manual review. By improving speed and accuracy, these engines ensure reliable results and maintain high data quality at scale.

Best for: Structured data and repetitive tasks

Accuracy benefit: Fast detection of obvious errors
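To make "predefined rules" concrete, here is a minimal sketch of a rule-based validator for text annotation records. The record fields (`text`, `label`), the allowed label set, and the three rules are all hypothetical. A real engine would load its rules from the project's own guidelines.

```python
ALLOWED_LABELS = {"positive", "negative", "neutral"}  # hypothetical label set


def validate_record(record):
    """Return a list of rule violations for one annotation record."""
    errors = []
    text = record.get("text")
    if not isinstance(text, str) or not text.strip():
        errors.append("missing or empty text")
    if record.get("label") not in ALLOWED_LABELS:
        errors.append("label not in allowed set")
    return errors


def validate_batch(records):
    """Map record index to its violations, also flagging duplicate texts."""
    report = {}
    seen = set()
    for index, record in enumerate(records):
        errors = validate_record(record)
        text = record.get("text")
        if isinstance(text, str) and text.strip():
            key = text.strip().lower()  # case- and whitespace-insensitive match
            if key in seen:
                errors.append("duplicate text")
            seen.add(key)
        if errors:
            report[index] = errors
    return report


# Made-up batch: one clean record, one empty text, one typo label plus duplicate.
batch = [
    {"text": "Great product", "label": "positive"},
    {"text": "", "label": "negative"},
    {"text": "great product ", "label": "positve"},
]
report = validate_batch(batch)
print(report)
```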

6. Model Output Evaluation Tools

Model Output Evaluation Tools are software or frameworks used to assess the performance and accuracy of AI models. They help measure how well a model’s predictions align with expected results, ensuring reliability and quality. By providing insights into errors, biases, and strengths, these tools guide improvements and optimize model performance.

Best for: Model fine-tuning and validation

Accuracy benefit: Direct feedback on model learning quality
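Comparing predictions with expected results usually comes down to a few standard metrics. The sketch below computes precision, recall, and F1 for one class in plain Python. The labels are invented for the example, and in practice teams often use a library such as scikit-learn instead.

```python
def precision_recall_f1(expected, predicted, positive):
    """Precision, recall, and F1 for one class treated as the positive class."""
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must have the same length")
    pairs = list(zip(expected, predicted))
    tp = sum(e == positive and p == positive for e, p in pairs)
    fp = sum(e != positive and p == positive for e, p in pairs)
    fn = sum(e == positive and p != positive for e, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Made-up moderation labels: reviewer-approved answers vs. model output.
expected = ["toxic", "toxic", "safe", "safe", "toxic", "safe"]
predicted = ["toxic", "safe", "safe", "toxic", "toxic", "safe"]
precision, recall, f1 = precision_recall_f1(expected, predicted, "toxic")
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```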

7. Annotation Review Dashboards

Annotation Review Dashboards are tools designed to help teams monitor, evaluate, and manage data labeling tasks efficiently. They provide a clear overview of annotation progress, quality metrics, and reviewer feedback in one centralized interface. By using these dashboards, organizations can ensure consistent data quality, identify errors quickly, and streamline the review process.

Best for: Ongoing AI training projects

Accuracy benefit: Continuous improvement

8. Sampling-Based Evaluation Tools

Sampling-Based Evaluation Tools are used to assess the quality of large datasets by analyzing a smaller, representative subset of the data. These tools help identify errors, inconsistencies, or biases without the need to review the entire dataset. By focusing on sampled data, they save time and resources while still providing reliable insights for decision-making.

Best for: Very large datasets

Accuracy benefit: Cost-effective quality assurance
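The sampling idea can be sketched as two steps: draw a random subset for review, then estimate the dataset's error rate with a confidence interval. The example below uses a Wilson score interval, which is one common choice. The dataset size, the sample size of 400, and the 12 errors found are made-up numbers.

```python
import math
import random


def sample_items(items, sample_size, seed=0):
    """Draw a simple random sample without replacement (seeded for repeatability)."""
    rng = random.Random(seed)
    return rng.sample(items, min(sample_size, len(items)))


def wilson_interval(errors, n, z=1.96):
    """Wilson score interval for an error rate; z=1.96 is roughly 95% confidence."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = errors / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return max(0.0, center - half), min(1.0, center + half)


dataset = list(range(100_000))  # stand-in for item ids
sample = sample_items(dataset, 400)
# Suppose reviewers find 12 labeling errors in the 400 sampled items.
low, high = wilson_interval(12, 400)
print(f"estimated error rate between {low:.1%} and {high:.1%}")
```

With these numbers, the interval comes out at roughly 1.7% to 5.2%. The estimate only holds if the sample is truly random. If errors cluster in certain annotators or data sources, a stratified sample may be a better fit.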

9. Expert Reviewer Networks

Expert Reviewer Networks are communities of skilled professionals who evaluate AI training data and model outputs in their own field to ensure quality and accuracy. These networks provide reliable, expert feedback that helps companies catch domain-specific errors and make informed decisions. By leveraging their specialized knowledge, businesses gain trust, credibility, and valuable insights from industry experts.

Best for: Medical, legal, or technical AI

Accuracy benefit: High domain-specific precision

10. Hybrid AI + Human Evaluation Systems

Hybrid AI + Human Evaluation Systems combine the efficiency of artificial intelligence with the judgment of human evaluators to ensure high-quality results. AI handles large-scale data processing and pattern recognition, while humans review and validate complex or nuanced tasks. This collaboration improves accuracy, reduces errors, and enhances decision-making across various applications.

Best for: Enterprise-level AI training

Accuracy benefit: Balanced speed and judgment
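A common way to split work between AI and humans is to route items by model confidence. The sketch below assumes each prediction carries a `confidence` score between 0 and 1. The field names and the 0.9 threshold are assumptions chosen for illustration.

```python
def route_predictions(predictions, threshold=0.9):
    """Split predictions into auto-accepted items and items for human review."""
    auto_accepted, needs_review = [], []
    for item in predictions:
        if item["confidence"] >= threshold:
            auto_accepted.append(item)
        else:
            needs_review.append(item)
    return auto_accepted, needs_review


# Made-up model outputs with confidence scores.
predictions = [
    {"id": 1, "label": "cat", "confidence": 0.98},
    {"id": 2, "label": "dog", "confidence": 0.62},
    {"id": 3, "label": "cat", "confidence": 0.91},
]
accepted, review_queue = route_predictions(predictions)
print([p["id"] for p in accepted], [p["id"] for p in review_queue])
```

Lowering the threshold sends fewer items to reviewers but lets more uncertain labels through, so the right value depends on how costly an error is for the project.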

Comparison of AI Training Task Evaluator Tool Types

| Tool Type | Human Involvement | Scalability | Accuracy Level |
|---|---|---|---|
| Automated validation | Low | Very high | Medium |
| Human-only review | High | Low–Medium | Very high |
| Hybrid evaluation | Medium–High | High | Very high |

Human Evaluation vs Automated Evaluation

Both human and automated evaluations have strengths and limitations.

Automated Evaluation

Pros

- Fast and scalable

- Low cost per task

Cons

- Misses context

- Cannot judge ambiguity well

Human Evaluation

Pros

- Understands nuance and intent

- Better at subjective decisions

Cons

- Slower

- Higher cost

Best Practice

Most high-quality AI systems use human-in-the-loop evaluation, combining both approaches.

How Companies Ensure High-Quality AI Training Data

Leading AI companies follow structured evaluation strategies instead of relying on a single tool.

Common practices include:

- Clear annotation guidelines

- Multi-layer QA checks

- Regular evaluator training

- Bias audits

- Performance tracking

Organizations like Remote Online Evaluator apply these practices by combining trained human evaluators with structured quality workflows, helping ensure AI training tasks meet global accuracy standards.

How to Choose the Right AI Training Task Evaluator Tool

Choosing the right tool depends on your project goals and constraints.

Key questions to ask:

- What type of data am I evaluating?

- How critical is accuracy vs speed?

- Do I need human judgment?

- What are my compliance requirements?

- Can the tool scale with my data?

Tool Selection Based on Project Needs

| Project Need | Recommended Tool Type |
|---|---|
| Fast validation | Automated evaluation |
| High accuracy | Human or hybrid evaluation |
| Large datasets | Sampling-based tools |
| Sensitive data | Expert reviewer networks |

The Future of AI Training Task Evaluation

AI training evaluation is evolving rapidly. Future trends include:

- Real-time task quality scoring

- Smarter bias detection algorithms

- Better collaboration between humans and AI

- Stronger focus on ethical AI

As AI systems become more integrated into daily life, the demand for accurate, transparent, and fair evaluation will continue to grow.

Conclusion

AI training task evaluator tools are essential for building reliable and high-performing AI systems. They ensure that training data is accurate, unbiased, and aligned with real-world needs. From automated validation to expert human review, each evaluation method plays a role in improving AI outcomes.

By choosing the right combination of tools and processes, organizations can significantly improve data accuracy, reduce risks, and build AI systems that users can trust. In the long run, accurate evaluation is not just a technical requirement; it is a competitive advantage.

FAQs

1. What are AI training task evaluator tools?

AI training task evaluator tools are software or platforms that help review, validate, and measure the accuracy of data used to train AI models. They ensure that the AI learns from high-quality, correct, and reliable data.

2. Why is data accuracy important in AI training?

Accurate data ensures that AI models make reliable predictions and decisions. Poor-quality or incorrect data can lead to biased, ineffective, or even harmful AI outputs.

3. How do AI task evaluator tools work?

These tools typically analyze datasets, check for errors, inconsistencies, duplicates, and bias, and provide feedback or scoring. Some tools also allow human evaluators to verify AI outputs for better accuracy.

4. Can these tools detect biased or misleading data?

Yes, many AI training task evaluator tools have features to detect bias, anomalies, and misleading patterns in datasets, helping to improve the fairness of AI models.

5. Are these tools suitable for all types of AI projects?

Most tools are versatile, but some are specialized for specific AI tasks like NLP (Natural Language Processing), computer vision, or recommendation systems. Choosing the right tool depends on your AI project type.

6. Do I need technical expertise to use these tools?

Some tools are beginner-friendly with a graphical interface, while others require technical knowledge. Many platforms provide tutorials or customer support for non-technical users.

7. Can these tools integrate with existing AI platforms?

Yes, many evaluator tools can integrate with popular AI platforms, machine learning frameworks, or data annotation pipelines to streamline data validation.

8. Do AI evaluator tools improve AI model performance?

Absolutely. By ensuring the data is accurate, clean, and unbiased, these tools help improve the overall performance, efficiency, and reliability of AI models.

9. Are these tools automated or manual?

Most modern AI training task evaluator tools offer a mix of automation and manual review. Automation handles bulk validation, while human evaluators verify complex or subjective tasks.

10. Where can I find the best AI training task evaluator tools?

Top tools are available online, and many offer free trials or demo versions. Some popular platforms include Appen, Scale AI, Amazon SageMaker Ground Truth, Labelbox, and Hive.